Agent Failure Modes: Compounding Errors and Invisible Failures (AI Integration 3/4)

The Sophistication Paradox

Recap: In the previous post, we established that LLMs need agents—systems that can interact with environments and maintain verification loops. But here's where the story gets interesting: as agents become more sophisticated, capable of orchestrating complex multi-step workflows, more things can go wrong and the system's absolute performance often remains surprisingly low.

The conventional wisdom says that more sophisticated agents mean better results. Everyone's excited about systems like OpenAI's Operator and Deep Research agents that can autonomously navigate GUIs and synthesize information from multiple sources. These systems look incredibly impressive in demos. But when we examine their actual performance data, where it's available, a different picture emerges.

This isn't just about current limitations that will be solved with the next model release (though it's likely that many improvements will come). It's about a fundamental design challenge: the more degrees of freedom a system has, the harder it becomes to verify its correctness. And without verification, sophistication can become a liability rather than an asset.

Compounding Errors: When Steps Multiply Problems

Along with other tools, OpenAI's Operator represents the current state-of-the-art in graphical user interface (GUI) automation agents. Rather then operating in text environments, Operator moves the mouse around the screen, presses buttons and simulates keyboard button presses. While Operator itself is proprietary, we can understand how these systems work from open-source analogs like UI-TARS. Here's the typical workflow, fitting the definition of an agent as “LLM wrecking it’s environment in a loop,” and making use of it’s agentic capabilities like planning and tool use (see my previous post for more details on these).

The GUI Agent Workflow:

Take a Screenshot (Input): The agent captures the current state of the application or webpage

Analyze the Screenshot (Vision Model): A vision model interprets the UI elements, identifying buttons, text fields, and interactive components

Decide on the Next Action (Planning): The system determines what action to take based on the user's goal and current state

Execute the Action (Tool Use): The agent performs the action—clicking, typing, scrolling—using automation tools

Observe the Result (Loop): The process repeats until the task is complete or fails

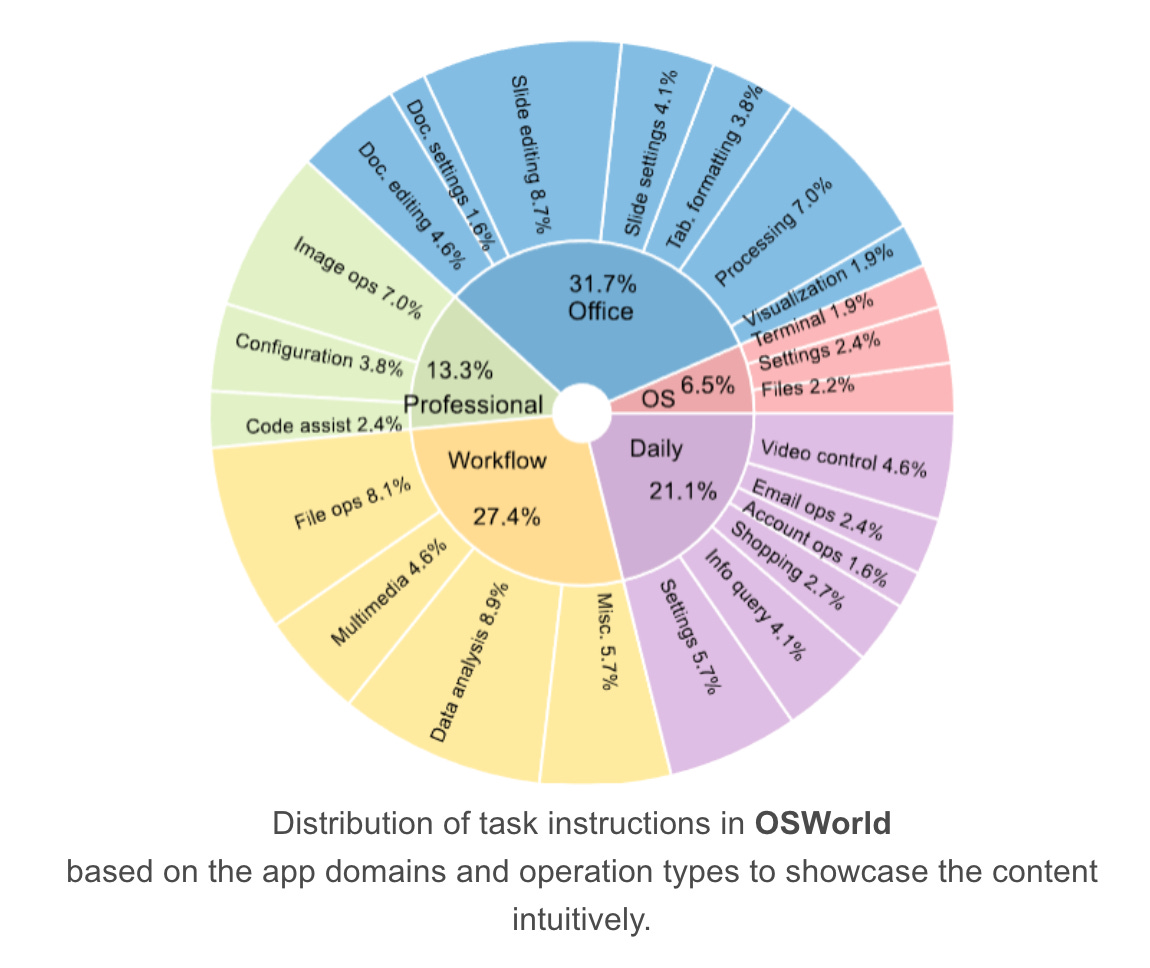

The concrete performance data reveals the challenge. On OSWorld, a benchmark testing real computer tasks, even the best-performing systems achieve modest success rates in absolute sense:

UI-TARS: 22.7% success rate

Claude 3.5 Sonnet: 14.9% success rate

These numbers aren't just about current model limitations. The challenge isn't just the individual steps—modern vision models are quite good at interpreting screenshots and modern automation tools can reliably click buttons. A big challenge is error compounding across multiple steps in high-degrees-of-freedom environments — you need to succeed many times to succeed at the task, but you only need to hit a dead end once to fail at the task.

Strategic Use Cases:

Sayash Kapoor's analysis of Operator's expense report automation attempt reveals a useful framework. GUI agents work best in specific sweet spots:

Easy, high-stakes tasks: Where traditional automation already exists and works better

Complex, low-stakes tasks: Where performance doesn't hurt and incorrect cases are easy to correct

Medium difficulty, workable with oversight: The current practical zone—tasks complex enough to benefit from AI but manageable enough for human verification

In contrast, GUI agents currently fail in these scenarios:

High-difficulty tasks (complex software testing, nuanced decision-making)

Long workflows where early errors cascade through multiple steps

Situations where the cost of automation errors is high but verification is difficult

I think a big part of the issue is that GUI agents face a multiplicative failure challenge. If each step has a 90% success rate, a 5-step workflow drops to 59% overall success, and a 10-step workflow plummets to 35%. With Operator's real-world performance, the compounding effect is even more severe. A single misclick, misinterpretation of UI elements, or timing issue early in the workflow can derail the entire task, requiring human intervention to restart from scratch.

Invisible Failures: When Wrong Looks Right

Deep Research agents (such as OpenAI or Google’s) tackle a different challenge: synthesizing information from multiple sources to answer complex questions. The workflow looks something like this.

The Deep Research Workflow:

Receive a Research Query (Input): User provides a question or research topic

Formulate Search Queries (Hidden Prompt): The agent breaks down the question into searchable components

Retrieve Information (Tool Use): Multiple searches across different sources and databases

Summarize and Synthesize (Hidden Prompt): The agent processes results into coherent findings

Verify and Cross-Reference (Hidden Prompt): Check for inconsistencies and identify gaps

Draft a Report (Hidden Prompt): Compile findings into a final research document

Unlike Operator, Deep Research agents suffer from what we might call the "invisible failure problem." When Operator fails to book a flight, you know immediately. When Deep Research produces a plausible-sounding report with subtle errors, incomplete coverage, or biased source selection, the failure is much harder to detect.

The Task-Dependent Performance:

Sayash Kapoor's systematic discussion of OpenAI and Google's Deep Research qualitatively compares the performance across different categories of tasks:

Factual Data Collection (Poor Performance):

Up-to-date factual queries like "which NBA players are over 30 years old?" often fail

Recent information competes with training data, leading to incorrect answers

Temporal and numerical reasoning remain weak points

Semantic Search for Research Examples (Strong Performance):

Collecting relevant examples for research projects works well

Verifying outputs is easier than finding items from millions of options

Can significantly reduce time spent on literature surveying

Particularly effective when combined with human judgment for filtering

Case Study Analysis as "Intuition Pump" (Contextual Value):

Impressive for analyzing recent literature and generating insights

Works well when factual correctness isn't the primary goal

Valuable for informing thinking rather than providing definitive answers

To me, the real problem isn't that Deep Research agents sometimes get things wrong—human researchers do too. The problem is that you can't verify the intermediate steps. Was the search strategy optimal? Were the most important sources identified? Did the synthesis accurately represent the source material? These questions are much harder to answer than "did the email get sent?"

What Really Works?

The failure modes we've explored—compounding errors and invisible failures—reveal something important about these systems. Both Operator and Deep Research represent a step forward in AI workflow integration, moving beyond isolated AI interactions in a browser toward true agentic systems that can orchestrate multi-step processes. This aligns with the vision I've outlined in previous posts about economists becoming more agentic in their AI use.

But here's the limitation: these systems are designed for general-purpose use, not for the specific workflows that economists actually need. Deep Research comes closest to economics research, but it aims for a general research workflow that inevitably falls short when applied to specific economic applications—whether that's empirical analysis requiring particular datasets, theoretical work demanding specific citation standards, or policy analysis needing domain-specific expertise.

When you design for everyone, you optimize for no one. Operator works on any interface but exploits the specific features of none. Deep Research handles any research question but lacks the domain knowledge that makes research actually useful. Both systems pay what we might call a "generality tax"—they sacrifice performance on specific tasks to maintain broad applicability.

Where Should Economists Look?

There's another model emerging from the one domain where AI integration has already been genuinely transformative: software development. Programmers haven't solved AI integration just by building more sophisticated general-purpose agents (though it's definitely a part of the story). They are solving it by designing domain-specific systems where automatic verification is possible, where human verification is decomposed into manageable chunks, and where constraints actually enhance rather than limit capability.

The coding analogy isn't perfect, but it's instructive. Programmers have figured out how to build AI workflows that fit their actual work—not generic systems that sort of work for everyone, but specific tools that work exceptionally well for their domain-specific needs.

Preview: In the next post, I will go into the details of AI coding workflows I believe economists can learn from. I will also share what I learned from my first full AI-integrated workflow in a historical data digitization project, as well as how moving from ad hoc AI testing to systematic workflows revealed principles that extend far beyond coding to reliable AI integration in economics research.